API Architecture for Massive Scale

A little background

I like to save time and just dive into the details, but in this case I think a little context helps inform some of the design decisions that were taken.

It was 2020, we were all in the early throes of a global pandemic, and my career continued down a path working for a string of large media companies. In parallel, US politics were heating up in what would be one of the wildest Presidential Election years this country had seen.

As a contractor for NBC Universal, I was building data pipelines to migrate their media catalog into the cloud for international syndication. With the death toll rising from COVID-19, I found myself needing to find a full-time position to provide healthcare for my family, and that’s how I found myself working for CNN as Principal Architect in the Digital Politics Group.

It felt like a dream job, working alongside teams of brilliant minds, both technically and in terms of US Politics (which are way messier than I could have possibly imagined).

No matter the party line they walk, lots of folks consume CNN Politics data when the election cycle comes through. On top of that, between foreign actors and the “fake news” rhetoric of the time, our APIs had giant targets on their backs.

Anyhow, we were charged with the important mission of providing election results to the global CNN audience. Between viewership being at all-time highs thanks to the lockdowns and an ultra-mega-charged political climate, we had to build a system that would inform the electorate, and failure was not an option.

To make things more interesting, I had less than two months to design, build, and deploy this critical system into production ahead of the 2020 election!

Design considerations

- High write throughput

- Volatile data that can update every second during the event, or not for days or months during the officiating process (and all the recounts…).

- Very high read throughput (billions and billions served)

- Many different views of the datasets (transforms, aggregates, etc…)

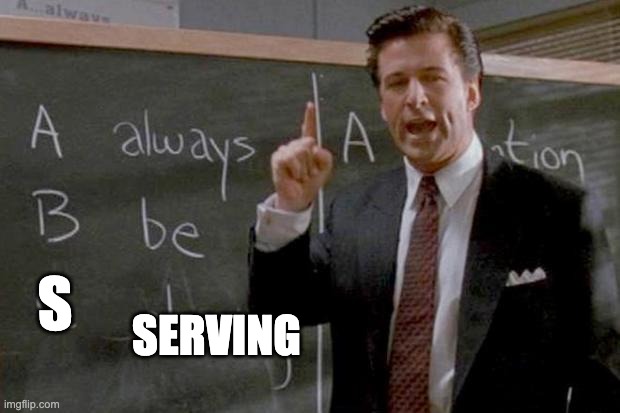

- Always be available

Traditional approaches, and why they wouldn’t work

Consider the above representation of a typical API backed by some sort of persistence layer. Often we have data that is either normalized across several tables in a *SQL technology or spread across several document types when it comes to NoSQL. The main difference there is where the joins happen.

Typical failure modes and undesirable traits for this design are:

- The data layer
  - Are read/write nodes provisioned to match the required load?
  - Deadlocking on writes?
  - Replication lag
  - Unnecessary writes for unchanged data

- Access patterns
  - How much compute is required to assemble the desired response (over and over even if the data hasn’t changed)?
  - Spaghetti monster of URLs vs data shapes

- Fail over/DR
  - How many hot/hot stacks do you need to ensure global stability?

While this pattern is great for many use cases, I couldn’t guarantee we’d be able to scale.

With all the data composition happening during the request/response cycle, there’s a lot of compute going on, and most of the time the underlying data hasn’t changed since the last query.

We could mitigate a bit of that inefficiency of composition by adding a cache layer as illustrated above. The problem with caching is finding the ideal balance between keeping requests efficient, while also ensuring we don’t serve stale data. Remember, we are already under the fake news microscope, which meant we needed to be even more vigilant on data integrity.

Another concern with traditional designs is data hotspots.

Some data might either be fairly static (so very few writes), or requested less frequently than other datasets (relatively few reads).

In these scenarios, you can wind up with an area of your API that suffers a performance degradation due to other areas eating up resources.

A microservice pattern can help here by allowing each resource API to scale independently. This doesn’t address all of our scale or DR concerns, though; it really just distributes the problem.

GraphQL can also help with the composition problem (URL/route sprawl)… but the problem with GraphQL is that the only fallback for GraphQL … is more GraphQL.

Reactive to the rescue

It’s funny how certain patterns seem to repeat. From the early days of my career integrating robotic systems with real time synchronization, to cloud scale collection and distribution of valuable datasets and media assets, event driven architecture almost always wins out.

Taking event driven architecture a little further, implementing Flow Based Programming solves a lot of our problems by allowing our data to change and flow independently of the consumption rate.

That’s the most important takeaway of this reactive pattern: isolating data volatility from consumption levels.

Breaking it down, we really have three loosely coupled components in this pattern.

Intelligent writes + CDC

Here we have one or more data sources as events. We might be polling an API, receiving events through some sort of broker, or batch processing.

To prevent unnecessary compute and IO downstream, we compute a hash of our data, and only update the event record IF the hash has changed since the last write.

This keeps our CDC (change data capture) output stream clean and only outputs “real” changes before pushing on to the message bus.
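To make the hash check concrete, here is a minimal sketch of a hash-gated write. Everything in it is an assumption for illustration: the `EventStore` class, the `write_if_changed` name, the record key, and the in-memory dict and list standing in for a real datastore and its CDC stream. It is not the production implementation, just the shape of the idea.

```python
import hashlib
import json


def content_hash(payload: dict) -> str:
    """Hash a canonical JSON encoding so key order does not affect the result."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class EventStore:
    """Hypothetical in-memory stand-in for the event table and its CDC stream."""

    def __init__(self):
        self.records = {}  # key -> (hash, payload)
        self.changes = []  # stand-in for the CDC output stream

    def write_if_changed(self, key: str, payload: dict) -> bool:
        """Write only when the payload hash differs from the stored one."""
        new_hash = content_hash(payload)
        current = self.records.get(key)
        if current is not None and current[0] == new_hash:
            return False  # unchanged: no write, no downstream event
        self.records[key] = (new_hash, payload)
        self.changes.append((key, payload))
        return True


store = EventStore()
first = store.write_if_changed("race-1", {"candidate": "A", "votes": 100})
repeat = store.write_if_changed("race-1", {"votes": 100, "candidate": "A"})
update = store.write_if_changed("race-1", {"candidate": "A", "votes": 101})
```

Serializing with sorted keys matters here: the second write carries the same data in a different key order, and it should not count as a change.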

With this optimization, you can get closer to “exactly once” processing, but can run into another issue of data starvation that we can talk about in another article.

Transform reactively

Now that we have a source of events to efficiently react to, the next layer of this pattern is to transform only when the data changes. Compared with the traditional design, we’re creating all of our derivative datasets as soon as the underlying data changes, rather than composing these datasets each time an uncached request comes in.

This completely decouples our write patterns from our read requirements.

In practice, this means that for each different shape of data we need, we want to listen to events that might be part of that data and recalculate appropriately.

As with most things in software development, this has already been done. We can use a tried and true pattern: the content-based router.

This powerful pattern allows discrete components in your modular, decoupled system to react only to very specific data. As an example, you might want to create a new dataset for vote data only for Senate races where the leading candidate is a woman.

The content-based router allows you to dig into the data event and decide whether it is something to respond to.
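As a rough illustration of the Senate example, a content-based router can be as small as a list of predicate and handler pairs. This is only a sketch: the `ContentBasedRouter` class and the event fields (`office`, `candidates`, `votes`, `gender`) are made up for the example and do not reflect the real event schema.

```python
from typing import Callable

Event = dict


class ContentBasedRouter:
    """Delivers each event only to handlers whose predicate matches its content."""

    def __init__(self):
        self._routes = []  # list of (predicate, handler) pairs

    def subscribe(self, predicate: Callable[[Event], bool],
                  handler: Callable[[Event], None]) -> None:
        self._routes.append((predicate, handler))

    def publish(self, event: Event) -> int:
        """Route one event and return how many handlers received it."""
        delivered = 0
        for predicate, handler in self._routes:
            if predicate(event):
                handler(event)
                delivered += 1
        return delivered


def is_senate_race_led_by_woman(event: Event) -> bool:
    # Hypothetical event shape; inspect the payload, not just a topic name.
    if event.get("office") != "senate" or not event.get("candidates"):
        return False
    leader = max(event["candidates"], key=lambda c: c["votes"])
    return leader.get("gender") == "F"


router = ContentBasedRouter()
women_leading_senate = []  # the derived dataset
router.subscribe(is_senate_race_led_by_woman, women_leading_senate.append)

matched = router.publish({
    "office": "senate",
    "candidates": [
        {"name": "Candidate A", "gender": "F", "votes": 120},
        {"name": "Candidate B", "gender": "M", "votes": 90},
    ],
})
skipped = router.publish({"office": "house", "candidates": []})
```

The handler never sees the House event, so the derived dataset only recalculates when something relevant to it actually changes.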

Another benefit to this design is that you can create new datasets independently and scale as needed.

You can also see that we’re treating transforms/aggregations the same way as our initial sources, allowing for chain reactions!
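A chain reaction could be sketched like this, with a transform emitting its output as a new event that another transform listens to. The `Bus` class, the topic names, and the county/state rollup are all hypothetical, and plain topic names stand in for the router's content predicates to keep the example short.

```python
from collections import defaultdict, deque


class Bus:
    """Tiny in-process event bus; a queue avoids deep recursion on chains."""

    def __init__(self):
        self.handlers = defaultdict(list)  # topic -> list of handlers
        self.queue = deque()

    def on(self, topic, handler):
        self.handlers[topic].append(handler)

    def emit(self, topic, data):
        self.queue.append((topic, data))

    def run(self):
        """Drain the queue, including events emitted by handlers along the way."""
        while self.queue:
            topic, data = self.queue.popleft()
            for handler in self.handlers[topic]:
                handler(data)


bus = Bus()
county_votes = {}  # (state, county) -> votes
state_totals = {}  # state -> votes, as seen by the national rollup
national = {"votes": 0}


def rollup_state(data):
    """First transform: recompute one state's total, then emit it as an event."""
    county_votes[(data["state"], data["county"])] = data["votes"]
    total = sum(v for (s, _), v in county_votes.items() if s == data["state"])
    bus.emit("state-total", {"state": data["state"], "votes": total})


def rollup_national(data):
    """Second transform: reacts to the output of the first one."""
    state_totals[data["state"]] = data["votes"]
    national["votes"] = sum(state_totals.values())


bus.on("county-result", rollup_state)
bus.on("state-total", rollup_national)

bus.emit("county-result", {"state": "GA", "county": "Fulton", "votes": 10})
bus.emit("county-result", {"state": "GA", "county": "Cobb", "votes": 5})
bus.emit("county-result", {"state": "CO", "county": "Denver", "votes": 7})
bus.run()
```

Nothing here is computed at request time. By the time a reader asks for the national total, it has already been recalculated in response to the last county update.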

Distribution

Our API layers wrap the other components up in a way that produces tangible value.

In a large org, you might have several verticals that are interested in shared datasets, but you also may have requirements to keep those verticals isolated in the cloud.

By allowing API stacks to also react via the content-based router, you can let each part of the business scale as needed. This also simplifies cost attribution by federating the data, while allowing individual groups to serve from their own cloud accounts.

Here we can see that each API instance can subscribe to specific datasets, and since all the permutations have already been calculated by reacting to actual changes, we can distill our access pattern down to a couple of API routes.

The main route pattern used to serve resources is:

/{resource-category}/{resource-type}/{resource-key}

Some examples could be:

/weather/humidity/US.json

/weather/humidity/US-GA.json

/covid19/reported-cases/NYC.json

/results/county-races/2022-AG-CO.json

If you look closely, you can see this is really just a file path. This means we can write the same data that backs our super fast compute + Redis layer to an object store such as AWS S3, or its counterparts in Azure/GCP.

That last part is what helped me sleep at night, knowing that even if our API went down, we could still serve static assets.

Due to things like “eventual consistency” associated with blob storage, we might be in a position where data isn’t quite as fresh as what’s being served from the compute based API, but we’re still able to tell our story.
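Here is a minimal sketch of that dual write and fallback read, assuming the route doubles as the object key. The function names are invented for the example, and plain dicts stand in for Redis and for an object store bucket; a real setup would use the respective clients and would more likely do the fallback at the CDN or origin level.

```python
import json


def resource_path(category: str, resource_type: str, key: str) -> str:
    """Build the route, which doubles as the object-store key."""
    return f"/{category}/{resource_type}/{key}.json"


def publish(path: str, payload: dict, cache: dict, object_store: dict) -> None:
    """Write the same serialized body to both the fast path and the static path."""
    body = json.dumps(payload, sort_keys=True)
    cache[path] = body
    object_store[path] = body


def fetch(path: str, cache: dict, object_store: dict) -> str:
    """Prefer the fast path; fall back to static assets on a miss or an outage."""
    try:
        return cache[path]
    except (KeyError, ConnectionError):
        return object_store[path]


class UnavailableCache(dict):
    """Hypothetical stand-in that simulates the compute-backed API being down."""

    def __getitem__(self, key):
        raise ConnectionError("cache unreachable")


cache, bucket = {}, {}
path = resource_path("weather", "humidity", "US-GA")
publish(path, {"humidity": 0.71}, cache, bucket)

from_api = fetch(path, cache, bucket)
from_fallback = fetch(path, UnavailableCache(), bucket)
```

Because both stores are keyed by the same path, the fallback needs no translation layer: the URL the client asked for is the object to serve.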

Oh, one more thing

One capability that falls out almost for free is being able to reactively notify external systems when your data changes.

This is super helpful if say… you are behind a global CDN and you want to invalidate cache on data as soon as it’s refreshed.

You can imagine there are lots of other use cases where knowing when specific data changes is very valuable to an organization.

Imagine using a chatbot to let teams know when specific, laser targeted scenarios happen so they can take action immediately.
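Both ideas boil down to more subscribers on the same change events. The sketch below is purely illustrative: `ChangeNotifier` and `FakeCdn` are made-up names, `FakeCdn.purge` just records paths, and a real CDN or chat integration would call that vendor's own API instead.

```python
class ChangeNotifier:
    """Fans out a changed resource path to every interested listener."""

    def __init__(self):
        self._listeners = []

    def subscribe(self, listener):
        self._listeners.append(listener)

    def resource_changed(self, path: str) -> None:
        for listener in self._listeners:
            listener(path)


class FakeCdn:
    """Hypothetical stand-in for a CDN client; it only records purge requests."""

    def __init__(self):
        self.purged = []

    def purge(self, path: str) -> None:
        self.purged.append(path)


cdn = FakeCdn()
chat_messages = []  # stand-in for messages a chatbot would post

notifier = ChangeNotifier()
notifier.subscribe(cdn.purge)
notifier.subscribe(lambda path: chat_messages.append(f"updated: {path}"))

notifier.resource_changed("/results/county-races/2022-AG-CO.json")
```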

In what was one of the craziest weeks of this developer’s career (and a crash course in US Politics!), this design paid off and we were able to serve billions of requests worth of accurate election data to our audiences. This event yielded two of the highest traffic days of viewership in CNN’s 40+ years.

While I was behind the design and much of the implementation of this pattern, it could never have happened without one of the greatest teams I’ve had the honor to work with, the folks at CNN Digital, Politics. ❤️

Until next time…